Meta rolled out its much-hyped Llama 4 fashions this previous weekend, touting massive efficiency positive aspects and new multimodal capabilities. However the rollout hasn’t gone as deliberate. What was alleged to mark a brand new chapter in Meta’s AI playbook is now caught up in benchmark dishonest accusations, sparking a wave of skepticism throughout the tech neighborhood.

Llama 4 Hits the Scene—Then the Headlines

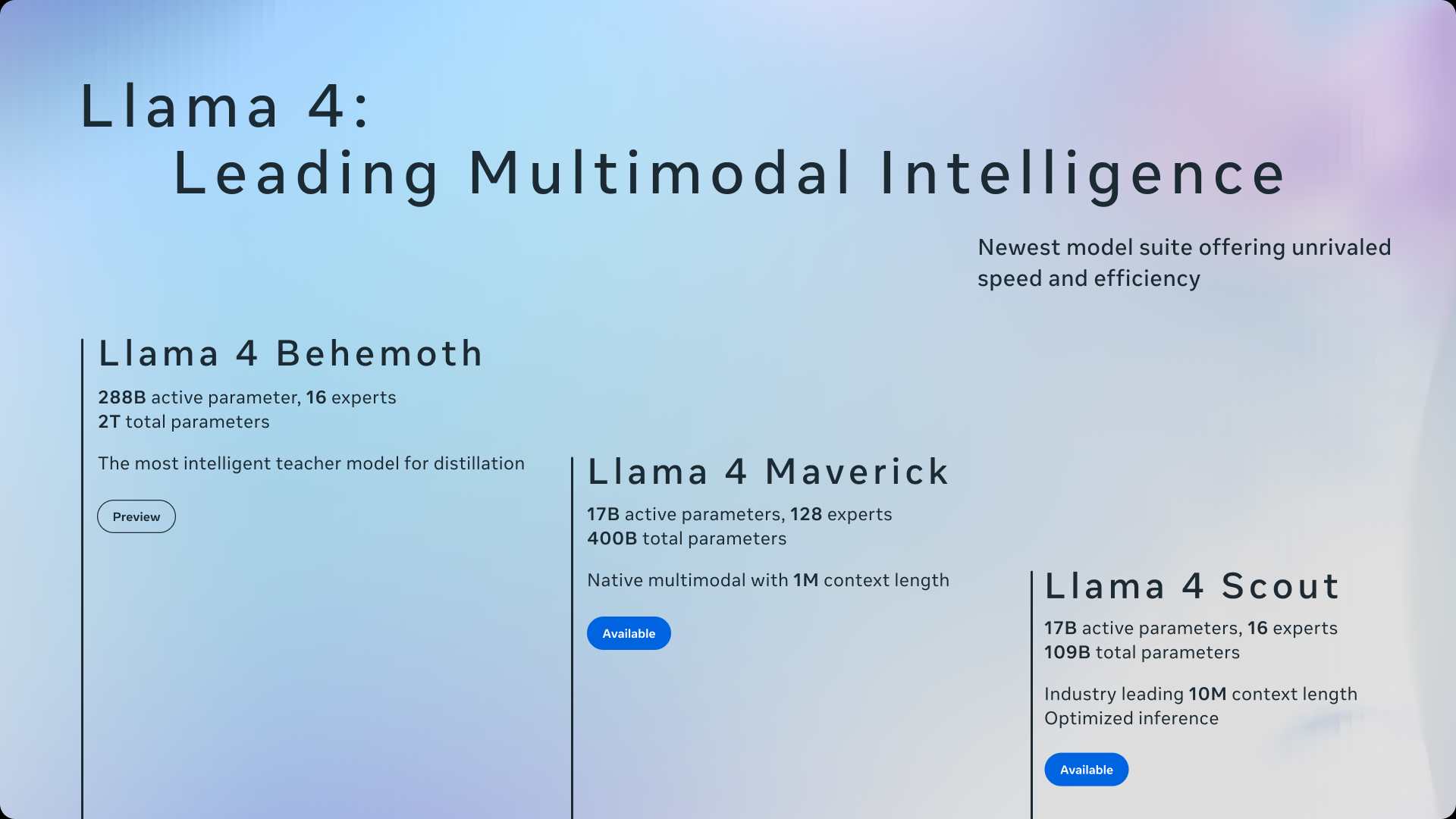

Meta launched three fashions below the Llama 4 title: Llama 4 Scout, Llama 4 Maverick, and the still-training Llama 4 Behemoth. In line with Meta, Scout and Maverick are already obtainable on Hugging Face and llama.com, and built-in into Meta AI merchandise throughout Messenger, Instagram Direct, WhatsApp, and the online.

Scout is a compact 17B-parameter mannequin constructed with 16 specialists and able to becoming on a single NVIDIA H100 GPU. Meta claims it outperforms Mistral 3.1, Gemini 2.0 Flash-Lite, and Gemma 3 on broadly reported benchmarks. Maverick, one other 17B-parameter mannequin however with 128 specialists, is claimed to beat GPT-4o and Gemini 2.0 Flash—whereas matching DeepSeek v3 in reasoning and code technology, all with far fewer parameters.

These fashions have been distilled from Meta’s largest and most formidable effort but—Llama 4 Behemoth, a 288B-parameter mannequin nonetheless in coaching. Meta says Behemoth already tops GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Professional on a variety of STEM benchmarks.

All of it sounds spectacular. However quickly after the launch, questions started to pile up.

The Benchmark Downside

“We developed a brand new coaching approach which we discuss with as MetaP that permits us to reliably set essential mannequin hyper-parameters equivalent to per-layer studying charges and initialization scales. We discovered that chosen hyper-parameters switch properly throughout totally different values of batch measurement, mannequin width, depth, and coaching tokens. Llama 4 allows open supply fine-tuning efforts by pre-training on 200 languages, together with over 100 with over 1 billion tokens every, and general 10x extra multilingual tokens than Llama 3,” Meta mentioned in a blog post.

Llama 4 Maverick, the extra highly effective of the 2 launched fashions, was on the middle of the controversy. Meta showcased its efficiency on LM Area, however critics seen one thing unusual—the model examined wasn’t the identical as the general public launch. It seems Meta used a custom-tuned model for benchmarking, resulting in claims that the outcomes have been padded.

Ahmad Al-Dahle, Meta’s VP of Generative AI, denied any foul play. He mentioned the corporate didn’t prepare on the check units and that any inconsistencies have been simply platform-specific quirks. Nonetheless, the injury was carried out. Social media erupted, with posters accusing Meta of “benchmark hacking” and manipulating check circumstances to make Llama 4 look stronger than it’s.

Contained in the Accusations

An nameless consumer claiming to be a former Meta engineer posted on a Chinese language discussion board, alleging that the crew behind Llama 4 adjusted post-training datasets to get higher scores. That publish triggered a firestorm on X and Reddit. Customers began connecting the dots—speaking about inside check discrepancies, alleged strain from management to push forward regardless of identified points, and a normal feeling that optics have been prioritized over accuracy.

The time period “Maverick ways” started circulating, shorthand for taking part in unfastened with testing protocols to chase headlines.

Meta’s Response and What’s Lacking

Meta addressed the considerations in an April 7 interview with TechCrunch, calling the accusations false and standing by the benchmarks. However critics say the corporate hasn’t supplied sufficient proof to again its claims. There’s no detailed methodology or whitepaper, and no entry to the uncooked testing knowledge. In an business the place scrutiny is rising, that silence is making issues worse.

Why It Issues

Benchmarks are an enormous deal in AI. They assist builders, researchers, and corporations evaluate fashions on impartial floor. However the system isn’t bulletproof—check units might be overfitted, and outcomes might be massaged. That’s why transparency issues. With out it, belief erodes quick.

Meta says Llama 4 affords “best-in-class” efficiency, however proper now, a bit of the neighborhood isn’t shopping for it. And for a corporation that’s betting massive on AI as a core pillar of its future, that form of doubt is difficult to shake.

The Greater Image

This isn’t nearly Meta. There’s a rising concern throughout the AI house that benchmark outcomes have gotten extra about advertising and marketing than science. The Llama 4 episode is simply the newest instance of how firms can get known as out—loudly—when the numbers don’t add up.

Whether or not these accusations maintain up stays to be seen. For now, Meta’s statements are standing in opposition to a flood of hypothesis. The corporate has formidable plans for Llama 4, and the fashions themselves could also be stable. However the rollout has raised extra questions than it answered—and people questions aren’t going away till we see extra transparency.

Llama 4 might find yourself being a serious win for Meta. Or it might be remembered because the launch that sparked one other spherical of belief points in AI.

🚀 Need Your Story Featured?

Get in entrance of 1000’s of founders, traders, PE corporations, tech executives, determination makers, and tech readers by submitting your story to TechStartups.com.